Side Projects

Last Updated 09/2022

Multi-agent reinforcement learning using coordination graphs

In this project (2021), we made a tutorial and blog post based on Sheng's work on multi-agent reinforcement learning using graph-based attention on a coordination graph of interacting agents. Blog Post | Tutorial Notebook | Sheng's Paper

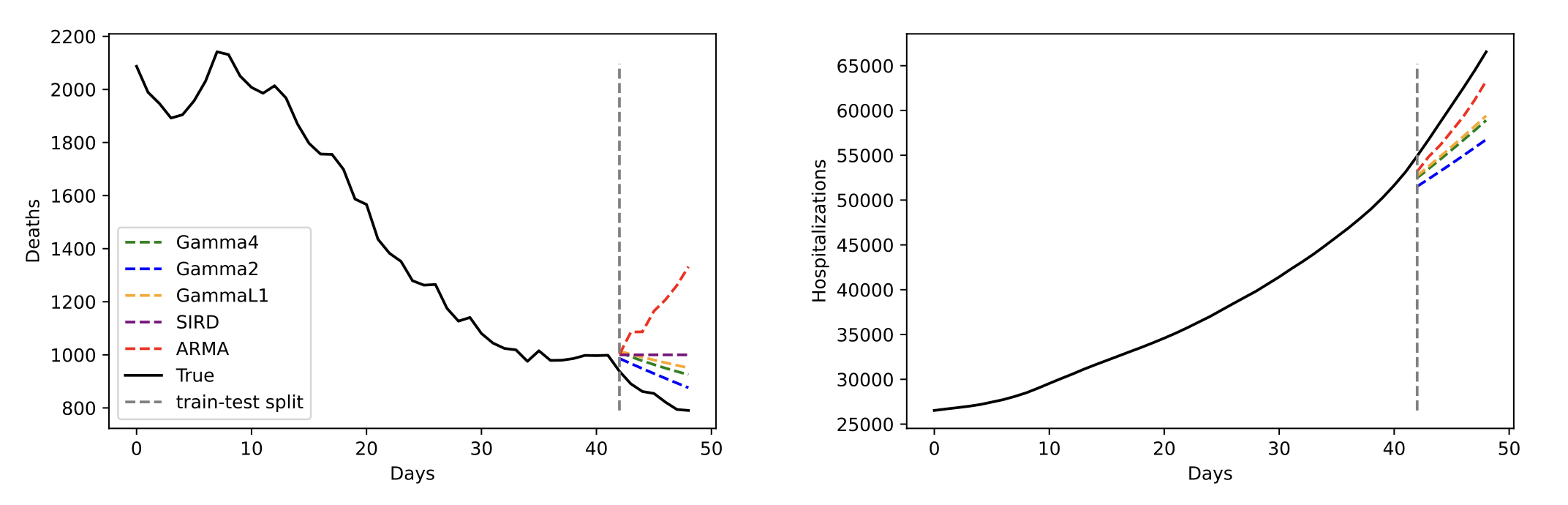
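To give a flavor of graph-based attention on a coordination graph, here is a minimal sketch in which each agent aggregates its neighbors' feature vectors using softmax-normalized, scaled dot-product scores. This is a deliberate simplification and not Sheng's architecture. It has no learned query/key/value projections, and the names `attend`, `features`, and `edges` are hypothetical.

```python
import math


def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def attend(features, edges, agent):
    """Aggregate neighbor features for `agent` with scaled dot-product attention.

    features: dict mapping agent -> feature vector (list of floats)
    edges:    dict mapping agent -> list of neighbors in the coordination graph
    (Both structures are hypothetical, chosen for illustration.)
    """
    neighbors = edges[agent]
    if not neighbors:
        # An isolated agent has nothing to attend to; keep its own features.
        return list(features[agent])
    query = features[agent]
    scale = math.sqrt(len(query))
    scores = [dot(query, features[n]) / scale for n in neighbors]
    weights = softmax(scores)
    dim = len(query)
    # Attention-weighted sum of the neighbors' feature vectors.
    return [sum(w * features[n][i] for w, n in zip(weights, neighbors)) for i in range(dim)]
```

In a learned model, the query and keys would typically come from trainable projections, and the aggregated vector would feed each agent's value or policy head.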

Covid-19 infection prediction modeling

In this project (2021), we proposed our own (convex) models to predict COVID-19 infections. We compared them to a regularized autoregressive moving average (ARMA) model (also trained through convex optimization) and to a (non-convex) SIRD model trained through Certainty-Equivalent Expectation-Maximization. We found that our models were (unsurprisingly) much faster to fit, and that all of the models were comparably poor at predicting COVID-19 infections. Turns out COVID-19 is quite hard to forecast. Paper | Code

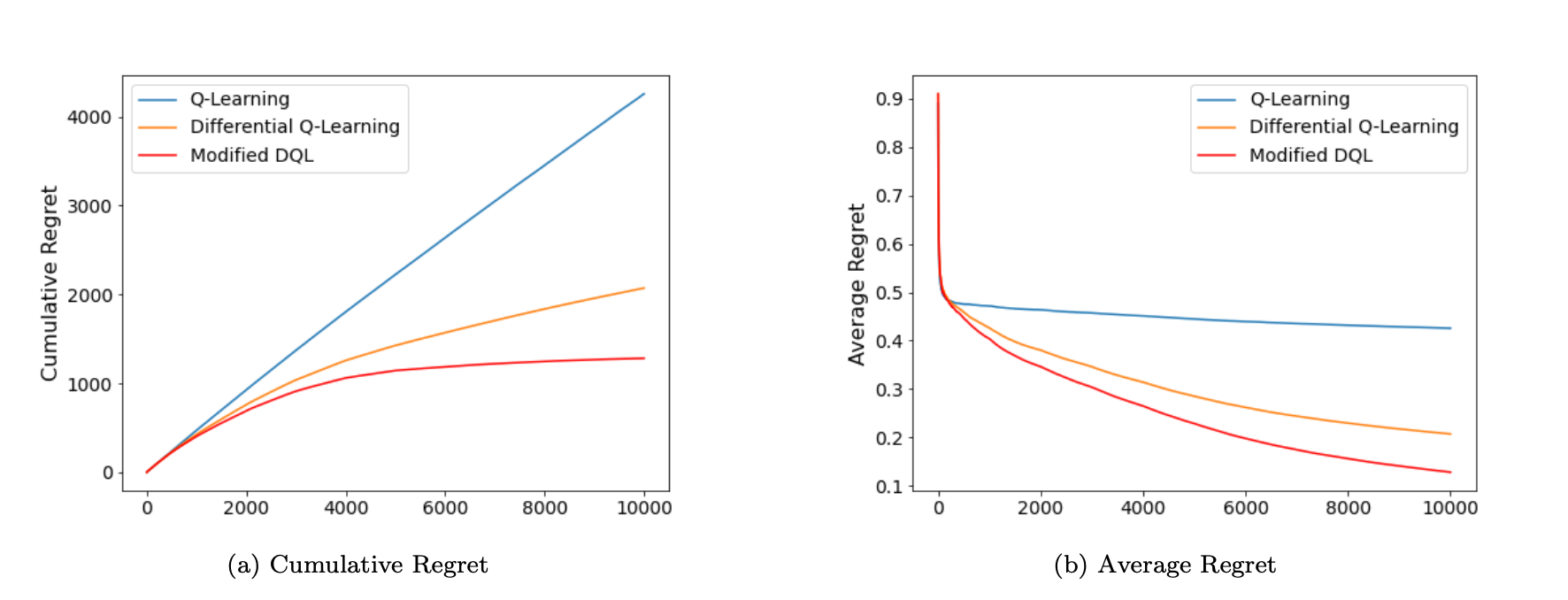
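For readers unfamiliar with the SIRD baseline, here is a minimal forward simulation of the standard compartmental model (Susceptible, Infected, Recovered, Dead) using a simple Euler step. It only shows the model dynamics. The Expectation-Maximization fitting is not shown. The parameter values and function names are illustrative assumptions and do not come from our paper.

```python
def sird_step(s, i, r, d, beta, gamma, mu, dt=1.0):
    """One Euler step of the SIRD model with constant total population N."""
    n = s + i + r + d
    new_infections = beta * s * i / n * dt  # S -> I
    new_recoveries = gamma * i * dt         # I -> R
    new_deaths = mu * i * dt                # I -> D
    return (
        s - new_infections,
        i + new_infections - new_recoveries - new_deaths,
        r + new_recoveries,
        d + new_deaths,
    )


def simulate_sird(s0, i0, beta, gamma, mu, days, dt=1.0):
    """Return the list of (S, I, R, D) states, starting with no recovered or dead."""
    state = (s0, i0, 0.0, 0.0)
    trajectory = [state]
    for _ in range(int(round(days / dt))):
        state = sird_step(*state, beta, gamma, mu, dt)
        trajectory.append(state)
    return trajectory
```

For example, `simulate_sird(990.0, 10.0, 0.3, 0.1, 0.01, 100)` produces 101 daily states whose compartments always sum to the initial population of 1000.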

Modified differential Q-Learning

In this project (2021), we looked at differential Q-learning, a variant of Q-learning that avoids introducing an artificial discount factor. Instead of discounted cumulative reward, it tracks the average reward and learns differential values (cumulative rewards measured relative to that average). We suggested a modification that tracks additional differentials over partitions of the state space. We showed that when a problem's reward structure lends itself to state partitioning and the partition is chosen well, you can learn better policies more quickly. Paper

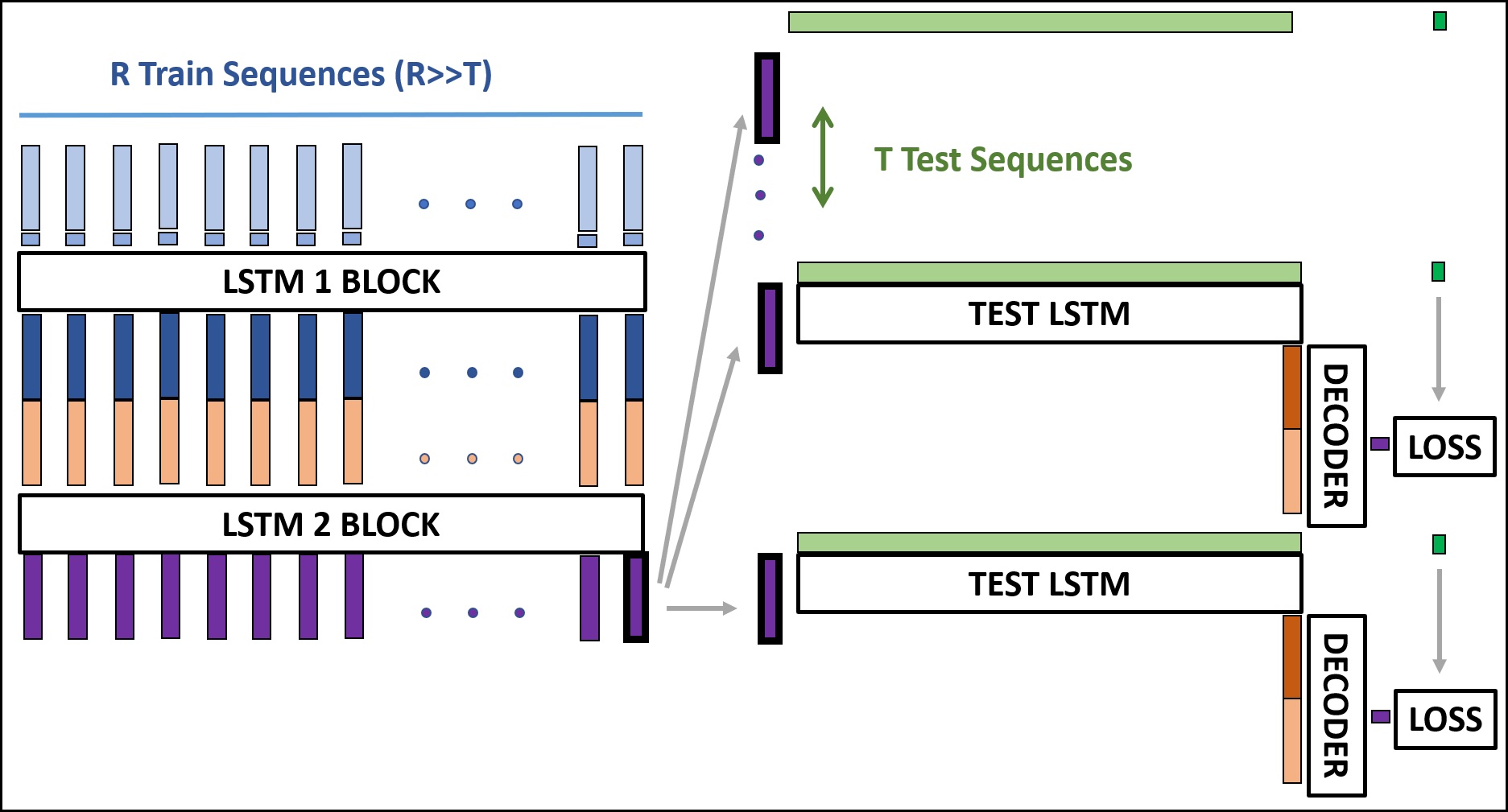
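For reference, the standard tabular differential Q-learning update can be sketched as below. The TD error uses the reward minus the current average-reward estimate, and that same error also nudges the average-reward estimate. This shows only the usual single-differential update, not our partitioned modification. The class name and the step sizes `alpha` and `eta` are illustrative.

```python
from collections import defaultdict


class DifferentialQLearning:
    """Tabular differential Q-learning (no discount factor)."""

    def __init__(self, actions, alpha=0.1, eta=1.0):
        self.actions = list(actions)
        self.alpha = alpha            # step size for Q-values
        self.eta = eta                # relative step size for the average reward
        self.q = defaultdict(float)   # (state, action) -> differential value
        self.avg_reward = 0.0         # running estimate of the average reward

    def update(self, s, a, r, s_next):
        best_next = max(self.q[(s_next, b)] for b in self.actions)
        # Differential TD error: reward relative to the average-reward estimate.
        delta = r - self.avg_reward + best_next - self.q[(s, a)]
        self.q[(s, a)] += self.alpha * delta
        # The same TD error drives the average-reward estimate.
        self.avg_reward += self.eta * self.alpha * delta
        return delta
```

As a sanity check, in a single-state, single-action problem with a constant reward of 1, `avg_reward` converges to 1 because the TD error reduces to `1 - avg_reward`.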

Meta black-box time-series forecasting with external covariates

In this project (2020), we tried to see if we could meta-learn how to make time-series predictions of electricity demand at new locations given little data at those locations (few-shot) but a lot of data at related locations. We put forward a method called TSMANN that built on Memory-Augmented Neural Networks but was tailored specifically to time-series forecasting with external covariates (e.g., weather information). Paper

Hyperparameter tuning through Expected Improvement exploration

In this project (2020), we wrote a library for automatic hyperparameter tuning using Bayesian optimization with Expected Improvement exploration. Better methods exist nowadays, e.g., Tree-structured Parzen Estimators (TPE) or BOHB. Paper | Code
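The Expected Improvement acquisition function has a closed form when the surrogate's prediction at a candidate point is Gaussian with mean `mu` and standard deviation `sigma`. Here is a minimal sketch, assuming we are minimizing a loss and `best` is the lowest loss observed so far. The function names and the optional exploration margin `xi` are illustrative, not our library's API.

```python
import math


def normal_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z):
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def expected_improvement(mu, sigma, best, xi=0.0):
    """EI for minimization: E[max(best - xi - f(x), 0)] with f(x) ~ N(mu, sigma^2)."""
    improvement = best - mu - xi
    if sigma <= 0.0:
        # No predictive uncertainty: the improvement is deterministic.
        return max(improvement, 0.0)
    z = improvement / sigma
    # The first term rewards a low predicted mean (exploitation);
    # the second rewards high uncertainty (exploration).
    return improvement * normal_cdf(z) + sigma * normal_pdf(z)
```

In a tuning loop, one would evaluate `expected_improvement` at many candidate hyperparameter settings using the surrogate's predictions (e.g., from a Gaussian process) and run the next trial at the candidate with the largest value.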

Continuous action EV charge scheduling through Monte Carlo Tree Search

In this project (2020), I tried to use Monte Carlo Tree Search to schedule electric vehicle charging given different probabilistic models of external electricity demand and non-convex charging dynamics. I handled the large continuous action and state spaces using double progressive widening. I also compared linear, feedforward neural network, and recurrent neural network models for single-step electricity demand prediction, while propagating the appropriate information down through the tree search. In hindsight, I think the most efficient way to solve this problem may be multi-forecast (scenario-based) MPC, using sample-efficient methods such as MPPI or CMA-ES for the non-convex optimization. Paper
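The core idea of double progressive widening is to let a node gain a new child only while its child count stays below `k * N**alpha`, where `N` is the node's visit count. This applies on both the action side (sampling a new continuous action) and the state side (sampling a new successor state). Here is a minimal sketch of just those two selection steps, assuming UCB1 for choosing among existing actions. The node classes and helper names are hypothetical. Visit counts and values are assumed to be updated by the caller during backpropagation (not shown). Revisiting successors uniformly at random is a simplification of count-weighted selection.

```python
import math
import random


def should_widen(num_children, num_visits, k, alpha):
    """Progressive widening test: allow a new child while children <= k * N^alpha."""
    return num_children <= k * num_visits ** alpha


class ActionNode:
    def __init__(self):
        self.visits = 0        # updated by the caller during backup
        self.value = 0.0       # running mean of returns, updated during backup
        self.next_states = []  # successor states sampled so far


class StateNode:
    def __init__(self):
        self.visits = 0        # updated by the caller during backup
        self.children = {}     # action -> ActionNode


def choose_action(node, sample_action, k=1.0, alpha=0.5, c=1.0):
    """Action-side widening, then UCB1 among the existing actions."""
    if should_widen(len(node.children), node.visits, k, alpha):
        node.children.setdefault(sample_action(), ActionNode())
    log_n = math.log(node.visits + 1)

    def ucb(item):
        _, child = item
        if child.visits == 0:
            return float("inf")  # try unvisited actions first
        return child.value + c * math.sqrt(log_n / child.visits)

    return max(node.children.items(), key=ucb)[0]


def choose_next_state(child, sample_transition, k=1.0, alpha=0.5):
    """State-side widening: sample a new successor if allowed, else revisit one."""
    if should_widen(len(child.next_states), child.visits, k, alpha):
        s_next = sample_transition()
        child.next_states.append(s_next)
        return s_next
    return random.choice(child.next_states)
```

With `alpha = 0.5`, the number of distinct actions (or successors) grows roughly with the square root of the visit count. The search keeps refining a finite set of children instead of spreading visits over infinitely many one-off samples.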

Bayesian probabilistic driver modeling

In this project (2020), we developed a probabilistic driver prediction model based on real highway data for vehicles changing lanes. We explored different methods for capturing epistemic uncertainty, including probabilistic feedforward and recurrent neural networks, RNNs trained with Bayes by Backprop, and Bayesian RNNs with posterior sharpening. Paper | Code
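Bayes by Backprop keeps a Gaussian variational posterior over each weight. Each weight has a mean `mu` and a parameter `rho` that is mapped to a positive standard deviation by a softplus. Weights are sampled with the reparameterization trick so gradients flow through `mu` and `rho`. Here is a minimal sketch of those two pieces. It assumes a standard normal prior, which gives a closed-form KL term, rather than the scale-mixture prior used in the original Bayes by Backprop paper. The function names are illustrative.

```python
import math
import random


def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def sample_weights(mu, rho, rng):
    """Reparameterized draw: w = mu + softplus(rho) * eps, with eps ~ N(0, 1)."""
    return [m + softplus(r) * rng.gauss(0.0, 1.0) for m, r in zip(mu, rho)]


def kl_to_standard_normal(mu, rho):
    """Sum over weights of KL(N(mu, sigma^2) || N(0, 1)), sigma = softplus(rho)."""
    total = 0.0
    for m, r in zip(mu, rho):
        s = softplus(r)
        total += 0.5 * (s * s + m * m - 1.0) - math.log(s)
    return total
```

The training loss is then the KL term minus the data log-likelihood under the sampled weights, i.e., a Monte Carlo estimate of the negative evidence lower bound.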

GaussianFilters.jl

We made a Julia library (2019) that implements the Kalman, Extended Kalman, and Unscented Kalman filters, using automatic differentiation to compute the Jacobians of the dynamics and observation functions. We also implemented the Probability Hypothesis Density (PHD) filter for multi-target tracking in cluttered environments. Paper on the PHD filter | Code
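The Extended Kalman Filter linearizes the dynamics `f` and observation model `h` around the current estimate. Here is a minimal scalar sketch of one predict/update step. It does not reproduce GaussianFilters.jl's API. In place of automatic differentiation, it uses a central finite difference for the derivatives, and the function names are illustrative.

```python
def derivative(fn, x, eps=1e-6):
    """Central finite-difference derivative (a stand-in for autodiff)."""
    return (fn(x + eps) - fn(x - eps)) / (2.0 * eps)


def ekf_step(x, p, z, f, h, q, r):
    """One scalar EKF step.

    x, p: prior mean and variance
    z:    new measurement
    f, h: dynamics and observation functions
    q, r: process and measurement noise variances
    """
    # Predict: propagate the mean through f, the variance through f's slope.
    F = derivative(f, x)
    x_pred = f(x)
    p_pred = F * p * F + q
    # Update: linearize h at the predicted mean and apply the Kalman gain.
    H = derivative(h, x_pred)
    innovation = z - h(x_pred)
    s = H * p_pred * H + r
    k = p_pred * H / s
    x_new = x_pred + k * innovation
    p_new = (1.0 - k * H) * p_pred
    return x_new, p_new
```

With linear `f` and `h`, the derivatives are constants and the step reduces to the ordinary Kalman filter. For example, `ekf_step(0.0, 1.0, 2.0, lambda v: v, lambda v: v, q=0.0, r=1.0)` returns approximately `(1.0, 0.5)`.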

3D neural style transfer

In this project (2019), we attempted to apply Neural Style Transfer to images with depth channels, hoping to blend the style transfer with the depth to make some cool pictures. We had mixed success; a big problem is that style itself is often 2D and doesn't blend well with depth. Still, some pretty cool pictures came out, and there are definitely better ways to do this nowadays. Paper
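In standard Neural Style Transfer, "style" is captured by Gram matrices of a network's feature maps. Each entry is the correlation between two channels, summed over spatial positions. This discards the spatial layout, which is part of why style is essentially a 2D notion. Here is a minimal sketch of the Gram matrix and a style loss for one layer. Normalization conventions vary between implementations, and this one divides by the number of spatial positions. The function names are illustrative and not from our project.

```python
def gram_matrix(features):
    """features: list of C channels, each a flattened list of H*W activations."""
    n = len(features[0])  # number of spatial positions
    return [[sum(a * b for a, b in zip(fi, fj)) / n for fj in features] for fi in features]


def style_loss(gen_features, style_features):
    """Sum of squared differences between the two Gram matrices."""
    g = gram_matrix(gen_features)
    s = gram_matrix(style_features)
    c = len(g)
    return sum((g[i][j] - s[i][j]) ** 2 for i in range(c) for j in range(c))
```

In practice, this loss is computed on several layers of a pretrained CNN and combined with a content loss. The generated image is then optimized to minimize the weighted sum.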